Who knew that an unlikely friendship and a few games of cricket with one of the greatest mathematicians of the early 20th century could lead to a breakthrough in population genetics?

Today, it is almost commonplace in the scientific community to accept that natural selection and Mendelian genetics influence one another; for most of history, however, this was not the case. Until the early 1900s, many scientists regarded these ideas as nothing more than two opposing and unrelated positions on heredity, and were torn between a theory of inheritance (Mendelian genetics) and a theory of evolution through natural selection. Although natural selection could account for variation, which inheritance could not, it offered no real explanation of how traits were passed on to the next generation. For the most part, scientists could not see how well Mendel’s theory of inheritance fit with Darwin’s theory of evolution because they had no way to quantify the relationship. It was not until the introduction of the theorem of genetic equilibrium that biologists acquired the mathematical rigor needed to show how inheritance and natural selection interact. One of the men who helped provide this framework was G.H. Hardy.

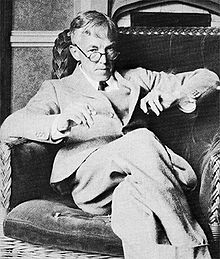

Godfrey Harold (G.H.) Hardy was a renowned English mathematician who lived from 1877 to 1947 and is best known for his accomplishments in number theory and for his collaboration with another great mathematician, Srinivasa Ramanujan. For a man who was such an outspoken supporter of pure mathematics and who abhorred any practical application of his work [5], it is ironic that he should have had such a powerful influence on a field of applied mathematics and helped shape our very understanding of population genetics.

How did a pure mathematician come to work on population genetics? Well, it all started with a few games of cricket. Whilst teaching at the University of Cambridge, Hardy often mixed with professors from other departments over friendly games of cricket and evening common meals [1]. It was through these interactions that Hardy came to know Reginald Punnett, cofounder of the genetics department at Cambridge and the man for whom the Punnett square is named, and the two developed a close friendship [13].

Punnett, being one of the foremost experts in population genetics, was in the thick of the debate over inheritance vs. evolution. His interactions with contemporaries such as G. Udny Yule made him wonder why a population did not eventually come to carry only the dominant variant, or allele, of a particular gene. This was the question he posed to Hardy in 1908, and Hardy’s response was nigh on brilliant. The answer was so simple that it almost seemed obvious; Hardy himself remarked, “I should have expected the very simple point which I wish to make to have been familiar to biologists” [4]. His solution was so simple, in fact, that, unbeknownst to him, another scientist in Germany, Wilhelm Weinberg, had reached the same conclusion in the same year [17]. In time, this concept would become known as Hardy-Weinberg Equilibrium (HWE).

In short, HWE asserts that when a population is not experiencing any of the forces that would cause it to evolve, such as genetic drift, gene flow, mutation, selection, or selective (non-random) mating, its allele frequencies (af) and genotype frequencies (gf) will remain constant from one generation to the next within that population (P’). To calculate the gf for humans, a diploid species whose members receive two complete sets of chromosomes, one from each parent, we simply take the proportion of individuals in P’ that carry each genotype.

$$0 \le gf \le 1$$

To calculate the af, we count allele copies: a homozygous individual carries two copies of the same allele (dominant AA or recessive aa), while a heterozygous individual carries one copy of each allele (Aa). The frequency of A is therefore the proportion of AA individuals plus half the proportion of Aa individuals, and likewise for a. P’ achieves “equilibrium” when these frequencies do not change.
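A minimal sketch of these two calculations in Python, assuming we have raw genotype counts from a sample of P’ rather than proportions. Each homozygote contributes two copies of its allele and each heterozygote one copy of each, so there are 2N alleles in N individuals. The function names and the sample counts are hypothetical, chosen only for illustration; exact fractions are used so that the sums come out to exactly 1.

```python
from fractions import Fraction

def genotype_frequencies(n_AA: int, n_Aa: int, n_aa: int) -> dict[str, Fraction]:
    """Genotype frequencies (gf): the share of individuals carrying each genotype."""
    n = n_AA + n_Aa + n_aa
    return {"AA": Fraction(n_AA, n), "Aa": Fraction(n_Aa, n), "aa": Fraction(n_aa, n)}

def allele_frequencies(n_AA: int, n_Aa: int, n_aa: int) -> dict[str, Fraction]:
    """Allele frequencies (af): N diploid individuals carry 2N alleles in total.
    Homozygotes contribute two copies of one allele, heterozygotes one copy of each."""
    total_alleles = 2 * (n_AA + n_Aa + n_aa)
    return {
        "A": Fraction(2 * n_AA + n_Aa, total_alleles),
        "a": Fraction(2 * n_aa + n_Aa, total_alleles),
    }

# Hypothetical sample of 100 individuals: 36 AA, 48 Aa, 16 aa
gf = genotype_frequencies(36, 48, 16)
af = allele_frequencies(36, 48, 16)
print(gf["AA"], gf["Aa"], gf["aa"])  # 9/25 12/25 4/25
print(af["A"], af["a"])              # 3/5 2/5
```

Both sets of frequencies sum to 1, and each lies between 0 and 1, as required.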

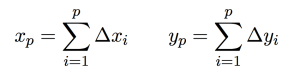

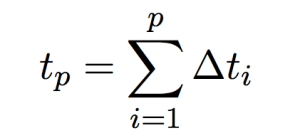

Hardy’s proof that these frequencies stay constant in a diploid population is as follows [1][4]:

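If the parental genotypic proportions are p AA : 2q Aa : r aa, then under random mating the offspring’s proportions are

$$(p + q)^2 \;:\; 2(p + q)(q + r) \;:\; (q + r)^2$$

With four equations (the three genotype frequencies and p + 2q + r = 1) and three unknowns, there must be a relation among them. “It is easy to see that . . . this is $q^2 = pr$.”

For the proportions to be unchanged, the offspring’s heterozygote proportion must equal the parents’, that is, 2q = 2(p + q)(q + r). Dividing by 2 and expanding gives:

$$q = (p + q)(q + r) = q(p + r) + pr + q^2$$

Rearranging, and using p + 2q + r = 1 (so that 1 − p − r = 2q), gives:

$$q^2 = q(1 - p - r) - pr = 2q^2 - pr \quad\Longrightarrow\quad q^2 = pr$$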
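Hardy’s argument can be checked numerically. The sketch below assumes random mating, as Hardy did, and uses exact fractions so that the equality q² = pr is tested exactly rather than approximately. The function `next_generation` and the starting proportions are hypothetical illustrations: the parents are deliberately chosen out of equilibrium, yet their offspring satisfy q² = pr and the following generation is identical.

```python
from fractions import Fraction

def next_generation(p: Fraction, q: Fraction, r: Fraction) -> tuple[Fraction, Fraction, Fraction]:
    """Offspring proportions in Hardy's notation P AA : 2Q Aa : R aa,
    from parents p AA : 2q Aa : r aa under random mating."""
    if p + 2 * q + r != 1:
        raise ValueError("proportions must satisfy p + 2q + r = 1")
    big_p = (p + q) ** 2           # AA: (p + q)^2
    big_q = (p + q) * (q + r)      # half of the Aa proportion 2(p + q)(q + r)
    big_r = (q + r) ** 2           # aa: (q + r)^2
    return big_p, big_q, big_r

# Hypothetical parents: 50% AA, 20% Aa (so q = 1/10), 30% aa
p, q, r = Fraction(1, 2), Fraction(1, 10), Fraction(3, 10)
print(q ** 2 == p * r)     # False: the parents are not in equilibrium

gen1 = next_generation(p, q, r)
P, Q, R = gen1
print(Q ** 2 == P * R)     # True: one round of random mating gives q^2 = pr

gen2 = next_generation(*gen1)
print(gen2 == gen1)        # True: the proportions no longer change
```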

To fully account for the population, the genotype frequencies must sum to 1, and so must the allele frequencies. And, since each generation draws its genes from the same pool of alleles, the frequencies remain constant, with the genotype frequencies following a binomial distribution (two alleles) or a multinomial distribution (more than two alleles).
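To make the multinomial case concrete, here is a small sketch, assuming random mating, that expands (p₁ + p₂ + … + pₖ)² into expected genotype frequencies: pᵢ² for each homozygote and 2pᵢpⱼ for each heterozygote. With two alleles it reduces to the familiar binomial terms. The function name and the three-allele frequencies are hypothetical.

```python
from fractions import Fraction
from itertools import combinations_with_replacement

def hwe_genotype_frequencies(allele_freqs: dict[str, Fraction]) -> dict[str, Fraction]:
    """Expected equilibrium genotype frequencies for any number of alleles:
    the terms of (p1 + ... + pk)^2, i.e. pi^2 for homozygotes, 2*pi*pj for heterozygotes."""
    if sum(allele_freqs.values()) != 1:
        raise ValueError("allele frequencies must sum to 1")
    expected = {}
    for a, b in combinations_with_replacement(sorted(allele_freqs), 2):
        if a == b:
            expected[a + b] = allele_freqs[a] ** 2
        else:
            expected[a + b] = 2 * allele_freqs[a] * allele_freqs[b]
    return expected

# Hypothetical gene with three alleles
freqs = {"A": Fraction(1, 2), "B": Fraction(3, 10), "O": Fraction(1, 5)}
genotypes = hwe_genotype_frequencies(freqs)
for genotype, freq in genotypes.items():
    print(genotype, freq)
print(sum(genotypes.values()))  # 1
```

Because the allele frequencies sum to 1, so does their square, which is why the six genotype frequencies account for the whole population.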

One important thing to keep in mind, however, is that almost every population is experiencing some form of evolutionary change. So, while HWE shows that, in the absence of such change, the frequencies neither shift nor disappear, it is best used as a baseline model against which to test for change or equilibrium.

When using the Hardy-Weinberg theorem to test for equilibrium, researchers divide the genotypic expressions into two homozygous events, HHο and hhο. The union of the two events’ frequencies (f) is then calculated to give the estimated number of alleles (Nf). In this case, the expression for HWE could read something like this:

Nf = f(HHο) ∪ f(hhο)

However, another way to view this expression is to represent each homozygous event with a single variable, p or q. Let p = f(H) be the frequency of the dominant allele H and q = f(h) the frequency of the recessive allele h. If the two alleles an individual inherits are drawn independently of each other (random mating), it then follows that p² = f(HHο) and q² = f(hhο). Multiplying out the two independent allele draws, (p + q)(p + q), covers every possible genotype, including the heterozygote, so we are left with F = (p + q)². Since the allele frequencies sum to one (p + q = 1), so must the genotype frequencies, and the prevailing expression for HWE emerges when F is expanded:

$$F = p^2 + 2pq + q^2 = 1$$
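A short sketch of how this formula is commonly used, assuming complete dominance (so that only the recessive homozygote hh can be recognised from its phenotype) and assuming the population is in equilibrium, so that f(hh) = q² and therefore q = √f(hh). The function names and the 4% figure are hypothetical. With 4% of individuals showing the recessive trait, q is about 0.2, p about 0.8, and the expected genotype frequencies are about 64% HH, 32% Hh, and 4% hh.

```python
import math

def hwe_expected(p: float) -> tuple[float, float, float]:
    """Expected (HH, Hh, hh) genotype frequencies p^2, 2pq, q^2 for allele frequency p = f(H)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    q = 1.0 - p
    return p * p, 2 * p * q, q * q

def q_from_recessive_phenotype(freq_hh: float) -> float:
    """Assuming complete dominance and HWE, f(hh) = q^2, so q = sqrt(f(hh))."""
    return math.sqrt(freq_hh)

hh_share = 0.04                             # hypothetical: 4% show the recessive trait
q = q_from_recessive_phenotype(hh_share)
p = 1 - q
f_HH, f_Hh, f_hh = hwe_expected(p)
print(f_HH, f_Hh, f_hh)                     # floating-point values close to 0.64, 0.32, 0.04
```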

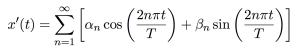

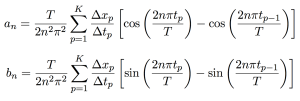

Using this formula, researchers can create a baseline model of P’ and then identify evolutionary pressures by comparing any subsequent frequencies of alleles and genotypes (F∝) to F. The data can then be visually represented as a change of allele frequency with respect to time.
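One common way to make this comparison is a goodness-of-fit test. Guo and Thompson [3] describe an exact test; the sketch below swaps in the simpler Pearson chi-square test, which is widely used but can be unreliable when expected counts are small. It estimates p from the sample, computes the expected counts p²N, 2pqN and q²N, and uses 1 degree of freedom (three genotype classes, minus one, minus one estimated parameter). For 1 degree of freedom the upper-tail probability equals erfc(√(χ²/2)), so only the standard library is needed. The function name and the sample counts are hypothetical.

```python
import math

def hwe_chi_square(n_HH: int, n_Hh: int, n_hh: int) -> tuple[float, float]:
    """Pearson chi-square test of observed genotype counts against HWE expectations.
    Returns (statistic, p_value) with 1 degree of freedom."""
    n = n_HH + n_Hh + n_hh
    if n == 0:
        raise ValueError("need at least one individual")
    p = (2 * n_HH + n_Hh) / (2 * n)        # estimated frequency of allele H
    if p in (0.0, 1.0):
        raise ValueError("both alleles must be present in the sample")
    q = 1 - p
    expected = (p * p * n, 2 * p * q * n, q * q * n)
    observed = (n_HH, n_Hh, n_hh)
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Upper tail of the chi-square distribution with 1 df: P(X >= stat) = erfc(sqrt(stat / 2))
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Hypothetical samples of 100 individuals, both with p = 0.6
stat_eq, pval_eq = hwe_chi_square(36, 48, 16)    # matches p^2 : 2pq : q^2 exactly
stat_dis, pval_dis = hwe_chi_square(50, 20, 30)  # heterozygote deficit
print(stat_eq, pval_eq)
print(stat_dis, pval_dis)
```

A small p-value, as in the second sample, suggests that the observed genotypes depart from the HWE baseline, which is the signal researchers then try to explain with drift, selection, non-random mating, or other pressures.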

HWE represents the curious situation that populations experience when their allele frequencies do not change. This situation is realized by first assuming complete dominance, then calculating the frequency of alleles, and then using the resulting number as a baseline against which to compare any subsequent values. Although there are some limitations on how we can use HWE (namely, identifying complete dominance), the model is very useful for identifying any evolutionary pressures a population may be experiencing and is one of the most important principles in population genetics. Developed, in part, by G.H. Hardy, it connected two key theories: the theory of inheritance and the theory of evolution. Although, mathematically speaking, his observation was almost trivial, Hardy provided the mathematical rigor the field sorely needed to show that genotypes do not simply disappear and, in turn, forever changed the way we view the fields of biology and genetics.

Sources:

1. Edwards, A. W. F. “G. H. Hardy (1908) and Hardy–Weinberg Equilibrium.” Genetics 3 (2008): 1143–1150.

2. Edwards, Anthony W. F. Foundations of Mathematical Genetics. Cambridge University Press, 2000.

3. Guo, Sun Wei, and Elizabeth A. Thompson. “Performing the exact test of Hardy-Weinberg proportion for multiple alleles.” Biometrics (1992): 361–372.

4. Hardy, Godfrey H. “Mendelian proportions in a mixed population.” Science 706 (1908): 49–50.

5. Hardy, G. H., and C. P. Snow. A Mathematician’s Apology. Reprinted, with a foreword by C. P. Snow. Cambridge University Press, 1967.

6. Pearson, Karl. “Mathematical contributions to the theory of evolution. XI. On the influence of natural selection on the variability and correlation of organs.” Philosophical Transactions of the Royal Society of London. Series A, Containing Papers of a Mathematical or Physical Character (1903): 1–66.

7. Pearson, K., 1904. Mathematical contributions to the theory of evolution. XII. On a generalised theory of alternative inheritance, with special reference to Mendel’s laws. Philos. Trans. R. Soc. A 203: 53–86.

8. Punnett, R. C., 1908. Mendelism in relation to disease. Proc. R. Soc. Med. 1: 135–168.

9. Punnett, R. C., 1911. Mendelism. Macmillan, London.

10. Punnett, R. C., 1915. Mimicry in Butterflies. Cambridge University Press, Cambridge/London/New York.

11. Punnett, R. C., 1917. Eliminating feeblemindedness. J. Hered. 8: 464–465.

12. Punnett, R. C., 1950. Early days of genetics. Heredity 4: 1–10.

13. Snow, C. P., 1967. G. H. Hardy. Macmillan, London.

14. Stern, C., 1943. The Hardy–Weinberg law. Science 97: 137–138.

15. Sturtevant, A. H., 1965. A History of Genetics. Cold Spring Harbor Laboratory Press, Cold Spring Harbor, NY.

16. Weinberg, Wilhelm. “Über Vererbungsgesetze beim Menschen.” Molecular and General Genetics MGG 1 (1908): 440–460.

17. Weinberg, W. “On the demonstration of heredity in man.” Boyer, S. H., trans. (1963). Papers on Human Genetics. Prentice Hall, Englewood Cliffs, NJ (1908).

Figure: Wikimedia Commons
