A breakdown of time Americans spend with electronic media. Image: Courtesy of http://www.statista.com/chart/1971/electronic-media-use, Felix Richter

We live in an era where the internet is king. Between our cellphones, tablets, game consoles, laptops, and other devices, the average American adult (18+) spends 11 hours per day ingesting electronic media in some way, shape, or form. I’m sure we can all admit that on a weekly basis we access or create data that we don’t necessarily want the public to see, whether it be our bank account or credit card information, our Facebook interactions, our emails, our tweets, our PayPal activity, or even our browsing history. That being said, I’m sure some of us take our internet privacy for granted; but how exactly does our internet privacy remain… private? The answer is simple: modular arithmetic, or more specifically, the cryptographic algorithms built on top of it.

A History of Cryptography

Cryptography dates back to Egyptian scribes around 1900 B.C., who first used it in their hieroglyphs. The Egyptians presumably wanted to hide the content of their hieroglyphs from others, and they used very basic cryptography to do so. As you can imagine, this whole “keeping a message’s content safe” idea would become widely popular as mankind became more and more intelligent. The Romans, specifically Julius Caesar himself, created one of the first truly math-oriented ciphers. He used it primarily to protect messages of military significance. Caesar’s cryptographic ideas would later serve as a building block for modern-day cryptography.

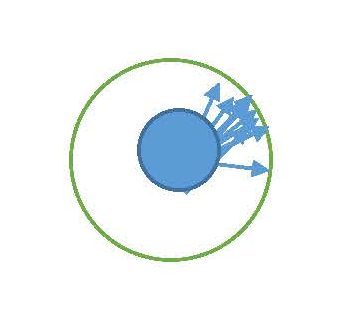

There are two main types of cryptography widely used across the web today: symmetric-key encryption and asymmetric-key encryption (we’ll go into details later, I promise!). Both of these types of encryption rely on modular arithmetic. We must give credit where credit is due: Carl Friedrich Gauss (1777-1855) introduced the modern approach to modular arithmetic in 1801. Believe it or not, this famous mathematician made most of his breakthroughs in his twenties!

For those that aren’t familiar with modular arithmetic, here’s a timeless example (pun intended, wait for it…). An ordinary number line can have a start and end point, or it can go on to infinity in either direction. In modular arithmetic, the number line is “circular”: it wraps back around to the start, and its length is called the modulus. Take a regular clock (see, here’s the pun!), consisting of the numbers 1-12. Clocks measure time on a 12-hour cycle before starting back over at 1, so the modulus for a 12-hour clock is 12. To actually do the arithmetic, take this for example: it’s 8 PM and we want to add 9 hours, i.e. (8 + 9) mod 12. 8 + 9 equals 17, but with a modulus of 12 our number line wraps back around after counting to 12. Counting 9 steps forward from 8 gives 9, 10, 11, 12, 1, 2, 3, 4, 5. So (8 + 9) mod 12 = 5, which is 5 AM in this case.
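For the programmers in the room, here is a minimal Python sketch of the clock example. The helper name `add_hours` is my own invention for illustration; the only real machinery is Python's `%` (remainder) operator. Note that a clock face shows 12 where plain `% 12` would give 0, so the helper shifts to 0..11 and back.

```python
def add_hours(start_hour: int, hours: int) -> int:
    """Add hours on a 12-hour clock face numbered 1..12 (hypothetical helper)."""
    # Shift to 0..11, add, wrap around with the modulus 12, then shift back to 1..12.
    return (start_hour - 1 + hours) % 12 + 1


print((8 + 9) % 12)     # 5  -> the raw modular arithmetic from the text
print(add_hours(8, 9))  # 5  -> 8 o'clock plus 9 hours
print(add_hours(3, 9))  # 12 -> plain (3 + 9) % 12 would give 0 here
```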

Caesar Cipher

As I said above, the Caesar cipher has acted as a building block for some of our modern day cryptography. Caesar’s main encryption step is incorporated in some of the more complex schemes we still rely on today. However, the Caesar cipher can be easily broken, or decrypted (more on this soon!). This particular cipher is concerned with the alphabet. The idea behind it is to replace each letter with a different letter some fixed number of positions down the alphabet (this is referred to as the shift). For instance, with a left shift of 3, D would be replaced by A, and E would be replaced by B:

Original: ABCDEFGHIJKLMNOPQRSTUVWXYZ

Cipher: XYZABCDEFGHIJKLMNOPQRSTUVW

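This can be represented mathematically using modular arithmetic. Number the letters A = 0, B = 1, and so on up to Z = 25. The encryption of any letter ‘x’ by a shift ‘n’ (counted to the right, so the left shift of 3 shown above is the same as n = 23) can be described as follows: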

Encryption:

E(x) = (x + n) mod 26

Decryption:

D(x) = (x - n) mod 26
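The two formulas translate almost directly into code. This is just a sketch under a few assumptions of mine: letters are numbered A = 0 through Z = 25, everything is uppercased, and anything that isn't a letter passes through untouched. The function names are made up for illustration.

```python
import string

ALPHABET = string.ascii_uppercase  # "ABC...XYZ", so A = 0, B = 1, ..., Z = 25


def caesar_encrypt(text: str, n: int) -> str:
    """Apply E(x) = (x + n) mod 26 to every letter of text."""
    out = []
    for ch in text.upper():
        if ch in ALPHABET:
            x = ALPHABET.index(ch)
            out.append(ALPHABET[(x + n) % 26])
        else:
            out.append(ch)  # leave spaces and punctuation alone
    return "".join(out)


def caesar_decrypt(text: str, n: int) -> str:
    """Apply D(x) = (x - n) mod 26, i.e. shift back by n."""
    return caesar_encrypt(text, -n)


print(caesar_encrypt("HELLO", 3))   # KHOOR
print(caesar_decrypt("KHOOR", 3))   # HELLO
print(caesar_encrypt("ABC", 23))    # XYZ, the left shift of 3 from the table
```

Python's `%` always returns a non-negative result for a positive modulus, which is why decrypting with a negative shift works without any extra handling.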

Brute-Force Attacking:

This cipher is extremely easy to break. There are only 26 possible shifts (one for each of the 26 English letters), and one of them leaves the message unchanged. When taking a brute-force approach, it’s only a matter of trying each shift until the message is readable. In fact, this process can be optimized by analyzing the encrypted string: find the most frequently used letters and match them with the most common letters of the language (E, T, and A in English). That way, you can try the most likely shifts first. However, this approach has to be adjusted when switching between languages, since letter frequencies differ.
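Here is a rough sketch of both attacks. The function names are hypothetical, and the frequency guess rests on one big assumption: that the most common letter in the ciphertext stands for E. That tends to hold for longer English text but can easily fail on short messages.

```python
import string
from collections import Counter

ALPHABET = string.ascii_uppercase


def shift(text: str, n: int) -> str:
    """Shift every letter of text by n positions (mod 26); other characters pass through."""
    return "".join(
        ALPHABET[(ALPHABET.index(ch) + n) % 26] if ch in ALPHABET else ch
        for ch in text.upper()
    )


def brute_force(ciphertext: str) -> list:
    """Return every (shift, candidate plaintext) pair; a human picks the readable one."""
    return [(n, shift(ciphertext, -n)) for n in range(26)]


def guess_shift_by_frequency(ciphertext: str) -> int:
    """Guess the shift, assuming the most common ciphertext letter is plaintext E."""
    letters = [ch for ch in ciphertext.upper() if ch in ALPHABET]
    if not letters:
        raise ValueError("ciphertext contains no letters")
    most_common = Counter(letters).most_common(1)[0][0]
    return (ALPHABET.index(most_common) - ALPHABET.index("E")) % 26


print(brute_force("KHOOR")[3])                   # (3, 'HELLO')
print(guess_shift_by_frequency("PHHW PH KHUH"))  # 3
print(shift("PHHW PH KHUH", -3))                 # MEET ME HERE
```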

Cryptography Online

As promised, I will explain the two types of internet cryptography. First, we have symmetric-key cryptography. This is based on the concept that both communicating parties share the same key for encryption as well as decryption. This key is mathematically applied to a numerical equivalent of the data each party is encrypting/decrypting. It is imperative that this key is kept secret. If another party finds out what the key is, none of the encrypted data is safe anymore. Symmetric-key cryptography uses either stream ciphers (which encrypt the numerical representation of the data one digit at a time) or block ciphers (which take blocks of digits and encrypt them as a whole). Symmetric-key algorithms have an advantage over asymmetric ones in that they require less computational power.
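To make the "same key on both sides" idea concrete, here is a deliberately toy sketch that XORs each byte of the data with a byte of a repeating key. This is my own illustration, not a cipher anyone should use: real stream ciphers generate their keystream in far more careful ways, and a repeating-key XOR like this one is easy to break. The function name and the sample key are made up.

```python
from itertools import cycle


def xor_stream(data: bytes, key: bytes) -> bytes:
    """Toy symmetric cipher: XOR each data byte with the next byte of a repeating key."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


shared_key = b"secret"  # both parties must know this, and nobody else
ciphertext = xor_stream(b"attack at dawn", shared_key)
plaintext = xor_stream(ciphertext, shared_key)  # the very same call decrypts

print(plaintext)  # b'attack at dawn'
```

The point to notice is that the exact same key and operation are used in both directions, one byte at a time, which is the stream-cipher flavor described above.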

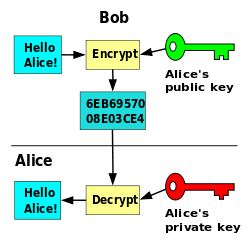

Asymmetric-key encryption. Anyone can encrypt data using the public key, but the data can only be decrypted with the private key. Image: Dave Gothenburg, via Wikimedia Commons.

As for asymmetric-key cryptography (aka public-key cryptography), we use a slightly different approach. This cryptosystem uses both a public and a private key. The public key is used to do the encryption, and unlike the shared key in symmetric-key cryptography it can be given to anyone; only the private key can do the decryption. The word “asymmetric” stems from the two different keys performing opposite functions. Because the two parties never have to agree on a shared secret in advance, this type of cryptosystem is more user friendly and requires less administration. This is why public-key cryptography is widely implemented across the web.

The RSA Cryptosystem

The RSA cryptosystem is one of the most practical applications of modular mathematics we see today. In fact, if you look at your browser’s address bar right now and you see an “https” at the beginning of your URL, you’re more than likely relying on RSA encryption to keep your data secure. RSA was created in 1977 by Ron Rivest, Adi Shamir, and Leonard Adleman at MIT. As far as cryptosystems are concerned, RSA in particular is one of the most straightforward to visualize mathematically. This algorithm consists of three parts: key generation, encryption, and decryption. I will be walking through a widely-used example using the primes 3 and 11. Not only does this process use Gauss’s modular arithmetic, it also uses Euler’s totient function φ(n), a function that counts the totatives of n (the positive integers less than or equal to n that are relatively prime to n).
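If the totient sounds abstract, it can be computed by simply counting, at least for small n. The sketch below does exactly that (the function name `totient` is my own) and checks it against the shortcut (p - 1) * (q - 1) that applies when n is the product of two different primes p and q.

```python
from math import gcd


def totient(n: int) -> int:
    """Count the integers 1..n that are relatively prime to n (fine for small n only)."""
    return sum(1 for a in range(1, n + 1) if gcd(a, n) == 1)


print(totient(33))          # 20
print((3 - 1) * (11 - 1))   # 20, the shortcut for n = 3 * 11
```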

Generating the key is the most confusing part, but here’s a somewhat simplified version (don’t get nauseous!):

- Randomly pick 2 prime numbers p and q : p=3 q=11

- Calculate the modulus : n = p * q -> 3 * 11 = 33

- Calculate the totient φ(n) : z = (p - 1) * (q - 1) -> (3 - 1) * (11 - 1) = 20

- Choose a number k with 1 < k < z that is co-prime to z (a prime that does not divide z is an easy choice) : k=7

- n and k become the public key

- Calculate the private key j such that k * j = 1 (mod z) -> 7 * j = 1 (mod 20)

- In the previous step, we’re only interested in the remainder. Since we’re working with small numbers here, we can look for a multiple of 7 that leaves a remainder of 1 when divided by 20: 21 / 20 = 1 with a remainder of 1. Therefore 7 * j = 21 -> j = 3

- j becomes the private key
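The steps above can be sketched in a few lines of Python. This is only a toy under stated assumptions: the function name `generate_keys` is hypothetical, the primes are handed in rather than picked at random, and the private key is found by trying every candidate, which only works because the numbers are tiny.

```python
from math import gcd


def generate_keys(p: int, q: int, k: int):
    """Toy RSA key generation following the steps above (p and q assumed prime)."""
    n = p * q                  # the modulus
    z = (p - 1) * (q - 1)      # the totient of n
    if gcd(k, z) != 1:
        raise ValueError("k must be co-prime to z")
    # Try every candidate j until k * j leaves a remainder of 1 when divided by z.
    j = next(c for c in range(1, z) if (k * c) % z == 1)
    return (n, k), j           # (public key, private key)


public_key, private_key = generate_keys(3, 11, 7)
print(public_key)   # (33, 7)
print(private_key)  # 3
```

On Python 3.8 or newer, the built-in `pow(k, -1, z)` computes the same modular inverse without the search.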

After the public and private keys are generated, encryption and decryption become easy! Given P is the data we’d like to encrypt and E is the encrypted message we want to generate:

P^k = E (mod n). When P (the data we’d like to encrypt) is 14, we get 14^7 = E (mod 33), so E = 20.

Given E is the encrypted data we’ve received, and P is the data we want to decrypt:

E^j = P (mod n). Here 20^3 = P (mod 33), so P = 14.

We get our original data back, which shows RSA works for this example!
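You can check the arithmetic yourself with Python's built-in three-argument `pow`, which raises a number to a power and reduces it by a modulus in one go. The `encrypt` and `decrypt` wrappers are just names I picked for readability, and the key values are the toy ones from this walkthrough (n = 33, k = 7, j = 3).

```python
n, k, j = 33, 7, 3  # toy modulus, public exponent, private exponent


def encrypt(P: int, k: int, n: int) -> int:
    """E = P^k mod n"""
    return pow(P, k, n)


def decrypt(E: int, j: int, n: int) -> int:
    """P = E^j mod n"""
    return pow(E, j, n)


E = encrypt(14, k, n)
print(E)                 # 20
print(decrypt(E, j, n))  # 14

# Every possible message 0..32 survives the round trip with this toy key.
print(all(decrypt(encrypt(m, k, n), j, n) == m for m in range(n)))  # True
```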

Does the RSA Cryptosystem Really Keep Me Safe?

Theoretically, a hacker could factor the modulus “n” in the steps above. Given the prime factors p and q, an attacker can compute the “secret exponent” j from the public key (n, k). Once the hacker has this “secret exponent”, they can decrypt all data encrypted with the matching public key. RSA keeps us safe from hackers because there is no publicly known algorithm (the NSA probably has one!) that can factor such large integers in a timely manner. In fact, at the time of writing, the largest RSA modulus known to have been factored was 768 bits (232 digits!) long, and this took a state-of-the-art implementation and a huge amount of computing power. If that doesn’t make you feel safe enough, RSA keys are typically 1024 to 2048 bits long (a 2048-bit number has 617 digits!), so factoring them is far out of reach of that kind of attack. Still, it is recommended that we use a value of n that is at least 2048 bits long to keep a comfortable safety margin.
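To see why the size of n matters, here is a sketch of the attack against the toy key from the walkthrough (modulus 33, public exponent 7). The function names are hypothetical, and the factoring is plain trial division, which is instant for 33 and hopeless for a 2048-bit modulus.

```python
def factor_semiprime(n: int):
    """Find p and q with p * q == n by trial division (only feasible for tiny n)."""
    p = 2
    while p * p <= n:
        if n % p == 0:
            return p, n // p
        p += 1
    raise ValueError("n has no non-trivial factors")


def recover_private_key(n: int, public_exponent: int) -> int:
    """Rebuild the private exponent from the public key by factoring n."""
    p, q = factor_semiprime(n)
    z = (p - 1) * (q - 1)
    # Same search as in key generation: find the inverse of the public exponent mod z.
    return next(c for c in range(1, z) if (public_exponent * c) % z == 1)


stolen = recover_private_key(33, 7)
print(factor_semiprime(33))  # (3, 11)
print(stolen)                # 3
print(pow(20, stolen, 33))   # 14, the intercepted message decrypted
```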

Sources:

http://en.wikipedia.org/wiki/RSA_(cryptosystem)

http://blogs.ams.org/mathgradblog/2014/03/30/rsa/

http://www.studentpulse.com/articles/41/a-brief-history-of-cryptography

http://www.ti89.com/cryptotut/mod_arithmetic.htm

http://en.wikipedia.org/wiki/Modular_arithmetic

http://cunymathblog.commons.gc.cuny.edu/

How and Why RSA works: